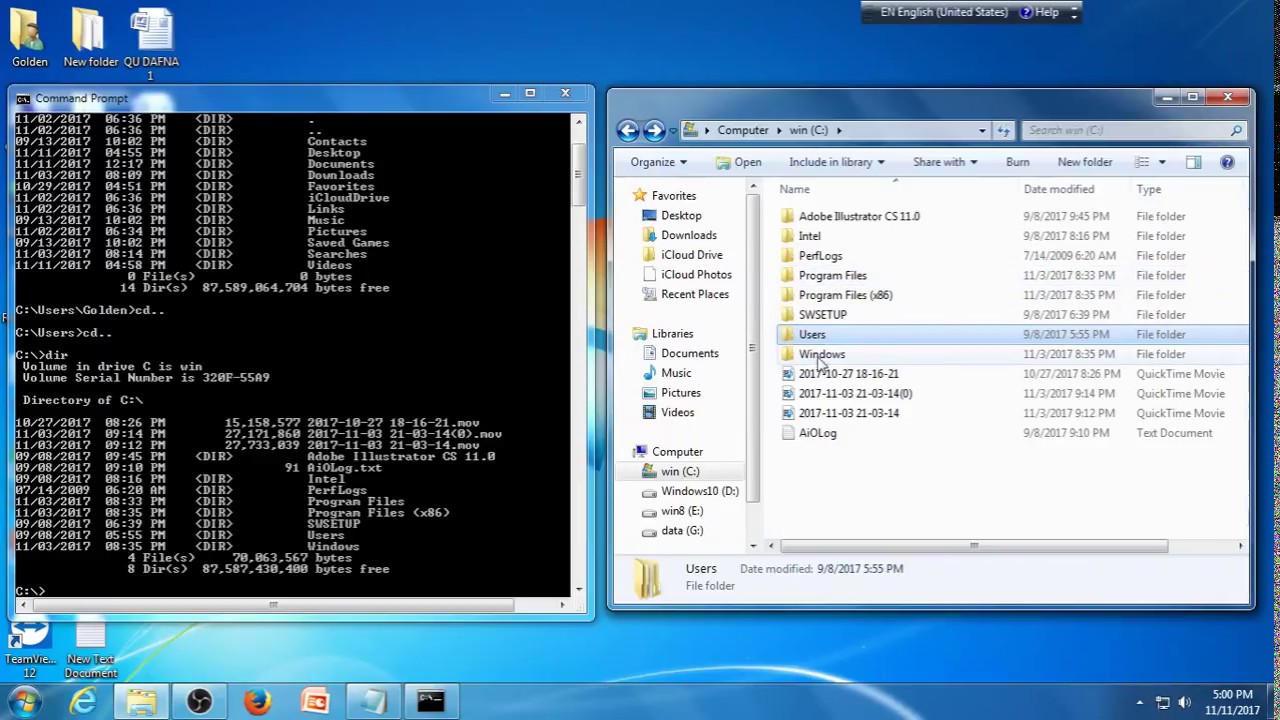

To list the contents of a directory in HDFS, use the `-ls` command, for example `hdfs dfs -ls`. Running the `-ls` command on a new cluster will not return any results. This is because the `-ls` command, without any arguments, attempts to display the contents of the user's home directory on HDFS.
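When scripting against the command line, the listing printed by `hdfs dfs -ls` can be parsed line by line. The sketch below assumes the usual eight-column layout (permissions, replication, owner, group, size, modification date, time, path), preceded by an optional `Found N items` header. The `parse_ls` function, the `HdfsEntry` class, and the sample listing are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HdfsEntry:
    permissions: str
    replication: str  # "-" for directories, which have no replication factor
    owner: str
    group: str
    size: int
    modified: str     # date and time joined, e.g. "2015-09-20 14:36"
    path: str

    @property
    def is_dir(self) -> bool:
        return self.permissions.startswith("d")


def parse_ls(output: str) -> List[HdfsEntry]:
    """Parse text printed by `hdfs dfs -ls` (assumed eight-column layout)."""
    entries = []
    for line in output.splitlines():
        line = line.strip()
        # Skip blank lines and the "Found N items" header.
        if not line or line.startswith("Found "):
            continue
        # maxsplit=7 keeps a path containing spaces intact in the last field.
        fields = line.split(maxsplit=7)
        if len(fields) != 8:
            continue  # not a listing line; ignore it
        perms, repl, owner, group, size, date, clock, path = fields
        entries.append(
            HdfsEntry(perms, repl, owner, group, int(size), f"{date} {clock}", path)
        )
    return entries


if __name__ == "__main__":
    # Hypothetical sample output, for illustration only.
    sample = """Found 2 items
drwxr-xr-x   - hduser supergroup          0 2015-09-20 14:36 /user/hduser/input
-rw-r--r--   3 hduser supergroup       3359 2015-09-20 14:37 /user/hduser/input.txt
"""
    for entry in parse_ls(sample):
        kind = "dir " if entry.is_dir else "file"
        print(kind, entry.size, entry.path)
```

Capturing the real output (for example with `subprocess.run(["hdfs", "dfs", "-ls", path], capture_output=True, text=True)`) requires a configured Hadoop client, so it is kept out of the parser, which can then be tested on its own.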

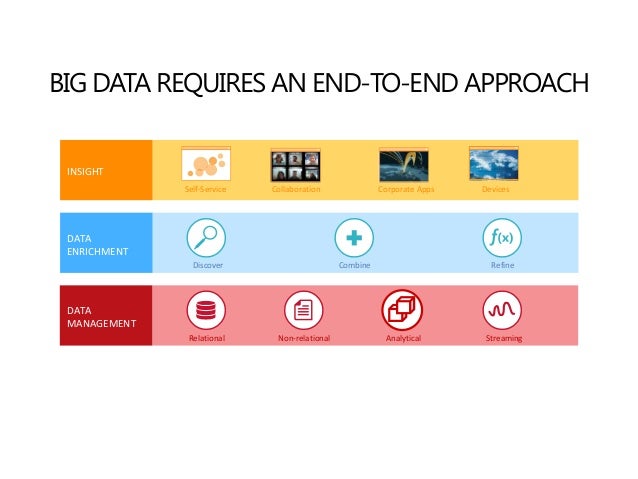

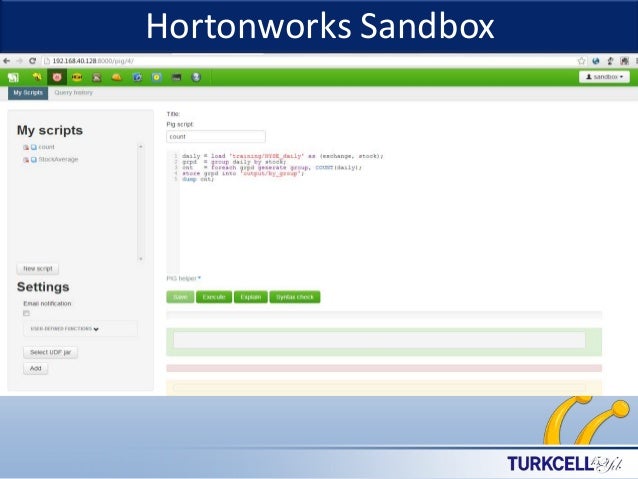

The architectural design of HDFS is composed of two processes: a process known as the NameNode holds the metadata for the filesystem, and one or more DataNode processes store the blocks that make up the files. The NameNode and DataNode processes can run on a single machine, but HDFS clusters commonly consist of a dedicated server running the NameNode process and possibly thousands of machines running the DataNode process.

The NameNode is the most important machine in HDFS. It stores metadata for the entire filesystem: filenames, file permissions, and the location of each block of each file. To allow fast access to this information, the NameNode stores the entire metadata structure in memory. The NameNode also tracks the replication factor of blocks, ensuring that machine failures do not result in data loss. Because the NameNode is a single point of failure, a secondary NameNode can be used to generate snapshots of the primary NameNode's memory structures, thereby reducing the risk of data loss if the NameNode fails.

The machines that store the blocks within HDFS are referred to as DataNodes. DataNodes are typically commodity machines with large storage capacities. Unlike a NameNode failure, the failure of a DataNode does not interrupt HDFS, which continues to operate normally. When a DataNode fails, the NameNode replicates the lost blocks to other DataNodes so that each block again meets the minimum replication factor. The example in Figure 1-1 illustrates the mapping of files to blocks in the NameNode, and the storage of blocks and their replicas within the DataNodes.

The following section describes how to interact with HDFS using the built-in commands. After a few examples, a Python client library is introduced that enables HDFS to be accessed programmatically from within Python applications.
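The division of labor between the NameNode and the DataNodes described above can be modeled in a few lines. The sketch below is a toy, in-memory stand-in for the NameNode's bookkeeping: a file-to-blocks map, a block-to-DataNodes map, and re-replication when a DataNode fails. It assumes a simple "least-loaded DataNode first" placement policy rather than the rack-aware policy real HDFS uses, and the `ToyNameNode` class and its methods are hypothetical, not part of any Hadoop API.

```python
from collections import defaultdict
from typing import Dict, List, Set


class ToyNameNode:
    """Hypothetical in-memory model of NameNode bookkeeping (not a Hadoop API)."""

    def __init__(self, datanodes: List[str], replication: int = 3) -> None:
        self.replication = replication
        self.live: Set[str] = set(datanodes)
        # Filesystem metadata: filename -> ordered list of block IDs.
        self.files: Dict[str, List[str]] = {}
        # Block map: block ID -> set of DataNodes currently holding a replica.
        self.locations: Dict[str, Set[str]] = defaultdict(set)

    def _load(self) -> Dict[str, int]:
        # Number of replicas stored on each live DataNode.
        load = {dn: 0 for dn in self.live}
        for holders in self.locations.values():
            for dn in holders:
                if dn in load:
                    load[dn] += 1
        return load

    def _pick_targets(self, block: str, count: int) -> List[str]:
        # Toy placement policy: least-loaded live DataNodes lacking this block.
        load = self._load()
        holders = self.locations[block]
        candidates = sorted(
            (dn for dn in self.live if dn not in holders),
            key=lambda dn: (load[dn], dn),
        )
        return candidates[:count]  # may be fewer than `count` on a small cluster

    def add_file(self, name: str, num_blocks: int) -> None:
        blocks = [f"{name}#blk{i}" for i in range(num_blocks)]
        self.files[name] = blocks
        for block in blocks:
            self.locations[block].update(self._pick_targets(block, self.replication))

    def datanode_failed(self, dn: str) -> None:
        # The filesystem keeps running: forget the node, then re-replicate
        # every block that dropped below the replication factor.
        self.live.discard(dn)
        for block, holders in self.locations.items():
            holders.discard(dn)
            missing = self.replication - len(holders)
            if missing > 0:
                holders.update(self._pick_targets(block, missing))


if __name__ == "__main__":
    nn = ToyNameNode(["dn1", "dn2", "dn3", "dn4"])
    nn.add_file("data.csv", 2)
    nn.datanode_failed("dn1")
    for block in nn.files["data.csv"]:
        print(block, sorted(nn.locations[block]))
```

Keeping both maps in plain dictionaries mirrors the point made in the text: the NameNode holds all metadata in memory so lookups are fast, while the blocks themselves live only on the DataNodes.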

The design of HDFS is based on GFS, the Google File System, which is described in a paper published by Google. Like many other distributed filesystems, HDFS holds a large amount of data and provides transparent access to many clients distributed across a network. Where HDFS excels is in its ability to store very large files in a reliable and scalable manner.

HDFS is designed to store large volumes of data, from gigabytes and terabytes up to petabytes, much of it in very large files. This is accomplished by using a block-structured filesystem: individual files are split into fixed-size blocks that are stored on machines across the cluster, so a file made of several blocks generally does not have all of its blocks stored on a single machine. HDFS ensures reliability by replicating blocks and distributing the replicas across the cluster. The default replication factor is three, meaning that each block exists three times on the cluster. Block-level replication enables data availability even when machines fail.

This chapter begins by introducing the core concepts of HDFS and explains how to interact with the filesystem using the native built-in commands.
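The block and replication arithmetic described above is easy to check by hand. This sketch assumes a 128 MiB block size (the default `dfs.blocksize` in Hadoop 2 and later; clusters may configure a different value) together with the default replication factor of three. The helper functions are hypothetical: they only model the arithmetic and do not talk to HDFS.

```python
from typing import List

MiB = 1024 * 1024
BLOCK_SIZE = 128 * MiB  # assumed: the default dfs.blocksize in Hadoop 2 and later
REPLICATION = 3         # default replication factor, as described above


def split_into_blocks(data: bytes, block_size: int = BLOCK_SIZE) -> List[bytes]:
    """Split a file's contents into fixed-size blocks; only the last may be shorter."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def block_count(file_size: int, block_size: int = BLOCK_SIZE) -> int:
    """Number of blocks a file of `file_size` bytes occupies (ceiling division)."""
    return (file_size + block_size - 1) // block_size


def replica_count(file_size: int, block_size: int = BLOCK_SIZE,
                  replication: int = REPLICATION) -> int:
    """Total block replicas the cluster stores for one file."""
    return block_count(file_size, block_size) * replication


def raw_storage(file_size: int, replication: int = REPLICATION) -> int:
    """Bytes consumed across all DataNodes. The last block is not padded
    to the full block size, so this is simply size times replication."""
    return file_size * replication


if __name__ == "__main__":
    size = 300 * MiB
    print(block_count(size))         # 3 blocks: 128 + 128 + 44 MiB
    print(replica_count(size))       # 9 block replicas
    print(raw_storage(size) // MiB)  # 900 MiB of raw capacity
```

Replication multiplies the raw capacity a cluster needs: with the default factor of three, every terabyte of data occupies roughly three terabytes of DataNode storage.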

The Hadoop Distributed File System (HDFS) is a Java-based distributed, scalable, and portable filesystem designed to span large clusters of commodity servers.
